<work in progress, still being updated>

Introduction

The growing field of AI content generation has raised concerns among artists and creators. This project aims to showcase how artists can use AI as a tool, rather than treat it as a competitor, to generate dynamic art in media they have not worked in before. The study explores, from the perspective of an artist with no programming background, the potential for creating dynamic digital content in the form of machine-generated code by communicating with AI.

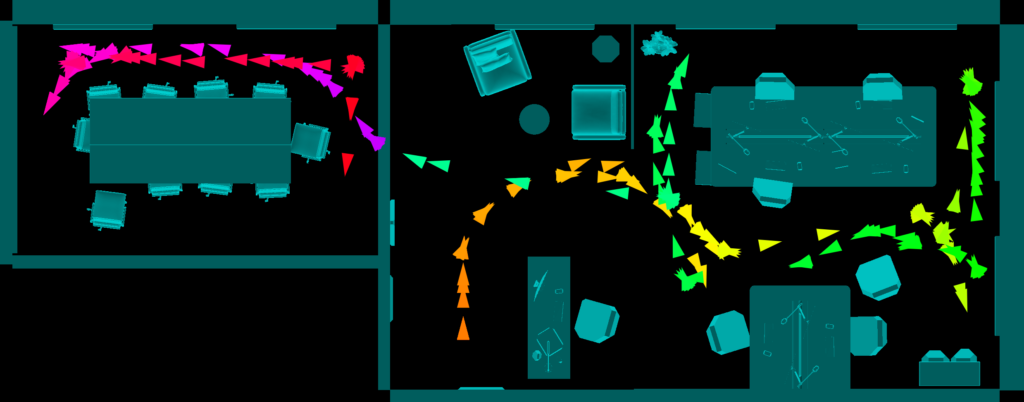

The result is an interactive website (aniRGB.yih0.com) that visualizes user location data gathered from research studies. When different colored lights illuminate the generated image, specific sections are concealed, creating an animation effect as the hues change continuously. The website features an animation mode to simulate this ongoing hue transition, bringing the image to life to facilitate research data analysis on-the-go.

In this document I describe the result and the development process, as well as how I used the GPT-4 model as a tool, acting as a dedicated programmer for dynamic artistic creation.

Implementation

Requirement Document

At the start of this project, I wrote a detailed requirements document for the web app. It describes the app’s goal, its capabilities, and what the user provides, covering the logistics of how things should work. I then gave this document to the GPT-4 LLM (Large Language Model) and asked it to generate a series of code files to create the website.

Please see the requirement document in the sample conversation.

Workflow

We followed an iterative development process instead of trying to get everything perfect on the first try. This means giving the AI the existing codebase and asking for improvements, new features, or fixes. Surprisingly, thanks to the completeness of the requirements document and GPT-4’s improved logic and reasoning compared to GPT-3, the model produced very few bugs and handled tricky situations well without the artist’s intervention, or even the artist’s knowledge of those potential issues. When an issue did occur (this happened only twice), I described how the website behaved, how it should behave, and provided the relevant source code so the AI could fix it. The AI was generally able to fix such issues in one try.

Persistence

As some might know, the ChatGPT website supports having the AI remember previous conversations. This works by supplying the whole chat history to the AI. I did not use this structure because:

- The AI has a context length limit. If too much information is presented, it discards the earliest parts, which would include the requirements document, thus altering its understanding of the project.

- Irrelevant information in the history interferes with the AI’s ability to understand the project correctly. For example, if a feature was requested and later removed, the AI might still remember the feature and thus generate less efficient or even incorrect code.

- Cost. Supplying the GPT-4 model with detailed conversation history through the OpenAI API would cost a lot (~$0.03 per 750 words).

My solution was to provide, in each new conversation, the requirements document, the current codebase, and a change history of the codebase written by the AI in the previous conversation. This way, a roughly fixed amount of information was presented each time, keeping the responses accurate, efficient, and cheap.
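The approach above can be sketched in a few lines of Python. This is only an illustration of the idea, not the code used in the project: the names `ProjectState`, `build_prompt`, and `estimate_cost_usd`, as well as the section titles, are hypothetical, and the cost estimate simply applies the rough rate quoted above (~$0.03 per 750 words) to a whitespace word count.

```python
from dataclasses import dataclass
from typing import Dict

# Assumption: the approximate rate quoted in the text, ~$0.03 per 750 words.
USD_PER_750_WORDS = 0.03


@dataclass
class ProjectState:
    """Everything handed to the model at the start of a fresh conversation."""
    requirements: str          # the requirements document, unchanged between sessions
    codebase: Dict[str, str]   # file name -> current file contents
    change_history: str        # change summary written by the AI in the last session


def build_prompt(state: ProjectState, request: str) -> str:
    """Assemble one self-contained prompt; no earlier chat history is included."""
    files = "\n\n".join(
        f"--- {name} ---\n{source}"
        for name, source in sorted(state.codebase.items())
    )
    sections = [
        ("REQUIREMENTS", state.requirements),
        ("CURRENT CODEBASE", files),
        ("CHANGE HISTORY", state.change_history),
        ("REQUEST", request),
    ]
    return "\n\n".join(f"## {title}\n{body.strip()}" for title, body in sections)


def estimate_cost_usd(prompt: str) -> float:
    """Rough input cost from a whitespace word count at the quoted rate."""
    words = len(prompt.split())
    return words / 750 * USD_PER_750_WORDS
```

Because the prompt is rebuilt from the same three pieces every time, its size tracks the size of the codebase rather than the length of the conversation, and removed features leave no trace unless the change history mentions them.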

Limitations

Many limitations exist for an artist without programming knowledge who works with AI to generate dynamic art. For example, the artist might not be able to describe what they want clearly, in language that is understandable and actionable for the AI. On the technical side, the AI-generated logic in my case could be further optimized, although the AI would probably be able to make some improvements if specifically asked to optimize.

Sample Conversation

This is a conversation where I ask the AI to implement two new features and fix an unwanted behavior.

I asked the AI to change the code to:

- Allow users to select among map presets that predefine the map background image and its in-game dimensions

- Allow an image to be generated even when a new background image is not provided

- Allow one full animation cycle to be exported to a video file and to an SVG file, using two buttons

Results

The result is a website (aniRGB.yih0.com, instructions: xu.yih0.com/aniRGB) capable of rendering movement information based on a time index. It can render a single image containing all movement information, or display an animation of arbitrary length that shows movement as time goes on. As input, it takes a CSV file that contains the frame number, location, and rotation for each frame.
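To make the input format concrete, here is a minimal sketch of reading such a file. The column names (`frame`, `x`, `y`, `rotation`), the two-dimensional location, and the rotation being in degrees are all assumptions made for illustration; the actual file layout is described in the instructions linked above.

```python
import csv
import io
from dataclasses import dataclass
from typing import List


@dataclass
class Sample:
    frame: int        # time index of this sample
    x: float          # location (assumed 2D, in map units)
    y: float
    rotation: float   # heading (assumed to be in degrees)


def load_samples(text: str) -> List[Sample]:
    """Parse CSV text with a header row into samples ordered by frame number."""
    reader = csv.DictReader(io.StringIO(text))
    samples = [
        Sample(
            frame=int(row["frame"]),
            x=float(row["x"]),
            y=float(row["y"]),
            rotation=float(row["rotation"]),
        )
        for row in reader
    ]
    # Sort so that later steps can treat the list as a timeline.
    return sorted(samples, key=lambda s: s.frame)
```

Each sample would then correspond to one arrow on the map, placed at the location and pointing along the rotation.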

We implemented an opacity filter to create cleaner visuals, although this is not how light works in real life. Each arrow is given an opacity based on how far it is from the arrow that should be fully visible, which removes unnecessary clutter from the view for data analysis purposes. The filter can be turned off; the map then looks more cluttered, but it is true to how light would behave in real life.
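One way such a filter could work is sketched below. This is an assumption-laden illustration rather than the site’s actual logic: it measures "how far" as the distance in frames from the currently visible frame, wraps that distance around the animation cycle, and fades linearly to zero over a hypothetical `falloff` window. With the filter disabled, every arrow is simply drawn at full opacity.

```python
def arrow_opacity(frame: int, visible_frame: int, total_frames: int,
                  falloff: int, enabled: bool = True) -> float:
    """Opacity in [0, 1] for the arrow recorded at `frame`.

    Assumptions (not taken from the project): distance is measured in frames,
    it wraps around the animation cycle, and the fade is linear.
    """
    if total_frames <= 0 or falloff <= 0:
        raise ValueError("total_frames and falloff must be positive")
    if not enabled:
        return 1.0  # filter off: nothing is faded
    distance = abs(frame - visible_frame) % total_frames
    distance = min(distance, total_frames - distance)  # shortest way around the cycle
    return max(0.0, 1.0 - distance / falloff)
```

For example, with a 100-frame cycle and a falloff of 20 frames, the arrow at the visible frame is fully opaque, an arrow 5 frames away is drawn at 0.75 opacity, and arrows 20 or more frames away disappear.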

Impact

This project is already making a positive impact on humanities-related research, and I believe the creation of this tool is a sign that human artists can collaborate creatively with AI on novel forms of artistic expression. Multiple professors at Worcester Polytechnic Institute and University of Massachusetts Lowell have spoken highly of this project and are using it for research visualization.

References

- The VR power wheelchair training simulation is a game for accessibility research called “WheelUp!” that I’m working on: GP2P/Wheel-Up (github.com)

- The First Person Shooter game is a game for network latency compensation research that I’m working on: FPSci: Network Latency Compensation | ShowFest (wpi.edu)

- OpenAI API Pricing: Pricing (openai.com)